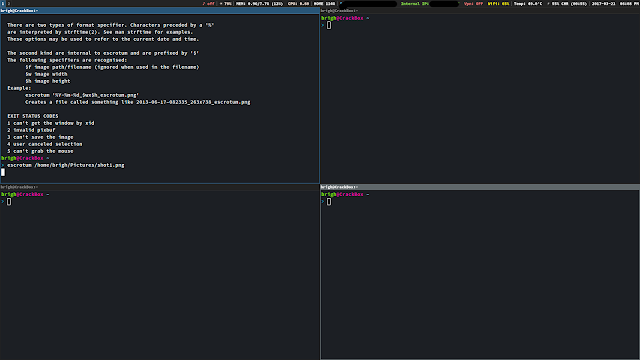

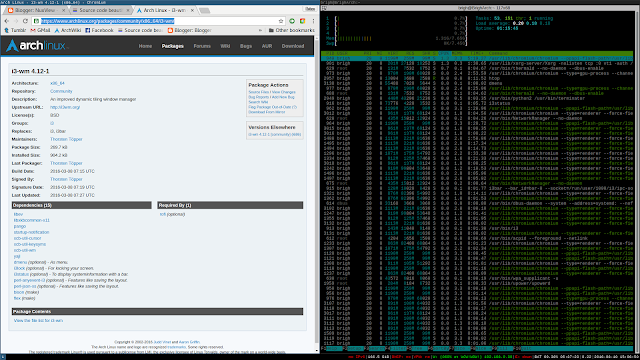

Downgrading:

Yes, I didn't learn how to do this until this past year. Though I've been using Arch for almost four years now, it was something I never needed (until I did, which of course forced me to learn). But more importantly, after learning how to downgrade packages, I discovered a nifty tool in the AUR which automates the hell out of this process.
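For the curious, the manual route boils down to finding an older copy of the package and installing it with `pacman -U`. By default, pacman keeps previously downloaded packages in `/var/cache/pacman/pkg`. Below is a minimal Python sketch of that lookup. It assumes cached files follow the usual `name-pkgver-pkgrel-arch.pkg.tar.*` naming. The function names are hypothetical, and the script only prints the command rather than running it.

```python
import sys
from pathlib import Path

# pacman's default cache directory; adjust if CacheDir is changed in pacman.conf.
CACHE_DIR = Path("/var/cache/pacman/pkg")


def parse_pkg_filename(filename):
    """Split 'name-pkgver-pkgrel-arch.pkg.tar.<ext>' into (name, version, arch).

    Returns None for files that don't look like packages, such as the
    detached '.sig' signature files that sit next to them in the cache.
    """
    if ".pkg.tar" not in filename or filename.endswith(".sig"):
        return None
    stem = filename.split(".pkg.tar", 1)[0]
    parts = stem.rsplit("-", 3)  # split from the right: names may contain '-'
    if len(parts) != 4:
        return None
    name, pkgver, pkgrel, arch = parts
    return name, f"{pkgver}-{pkgrel}", arch


def cached_versions(package, filenames):
    """Return (version, filename) pairs for every cached build of `package`."""
    matches = []
    for filename in filenames:
        parsed = parse_pkg_filename(filename)
        if parsed is not None and parsed[0] == package:
            matches.append((parsed[1], filename))
    return matches


if __name__ == "__main__":
    pkg = sys.argv[1] if len(sys.argv) > 1 else "linux"
    names = [p.name for p in CACHE_DIR.glob("*.pkg.tar*")] if CACHE_DIR.is_dir() else []
    for version, filename in cached_versions(pkg, names):
        # Only print the command; choosing and running it is left to you.
        print(f"{version}: sudo pacman -U {CACHE_DIR / filename}")
```

To use it, save the script as, say, `cached_versions.py`, run `python3 cached_versions.py linux`, and pick the version you want. The list isn't sorted, because pacman orders versions by its own rules (see the `vercmp` utility that ships with pacman), and this sketch doesn't try to reproduce them.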

The cleverly named 'downgrade' package in the AUR is a real time saver. It lets you choose from a list of versions for any package in the official repositories. It can also add that package to IgnorePkg in `/etc/pacman.conf`, so whatever issue you ran into doesn't come back with the next upgrade.
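The IgnorePkg part is just a line in the `[options]` section of `/etc/pacman.conf`, for example `IgnorePkg = linux`. Below is a rough sketch of what adding a package there amounts to. The helper name is hypothetical, and it edits the config text in memory. It assumes the stock file, where the directive ships commented out as `#IgnorePkg   =`. This is not necessarily how 'downgrade' itself does it.

```python
import re

# An active "IgnorePkg = a b c" line, capturing the package list.
ACTIVE_RE = re.compile(r"^\s*IgnorePkg\s*=(.*)$")
# The commented-out placeholder from the stock pacman.conf, e.g. "#IgnorePkg   =".
COMMENTED_RE = re.compile(r"^\s*#\s*IgnorePkg\s*=")


def add_ignore_pkg(conf_text, package):
    """Return a copy of pacman.conf text with `package` listed in IgnorePkg."""
    lines = conf_text.splitlines()

    # 1. An active IgnorePkg line exists: append to it, skipping duplicates.
    for i, line in enumerate(lines):
        match = ACTIVE_RE.match(line)
        if match:
            packages = match.group(1).split()
            if package not in packages:
                packages.append(package)
            lines[i] = "IgnorePkg = " + " ".join(packages)
            return "\n".join(lines) + "\n"

    new_line = f"IgnorePkg = {package}"

    # 2. Only the commented placeholder exists: add the real line right below it.
    for i, line in enumerate(lines):
        if COMMENTED_RE.match(line):
            lines.insert(i + 1, new_line)
            return "\n".join(lines) + "\n"

    # 3. Otherwise put it directly under the [options] header.
    for i, line in enumerate(lines):
        if line.strip() == "[options]":
            lines.insert(i + 1, new_line)
            return "\n".join(lines) + "\n"

    raise ValueError("no [options] section found")
```

The helper leaves the original comment in place and adds a real line below it. That way, the stock placeholder survives, and the change is easy to spot and revert later.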

| |

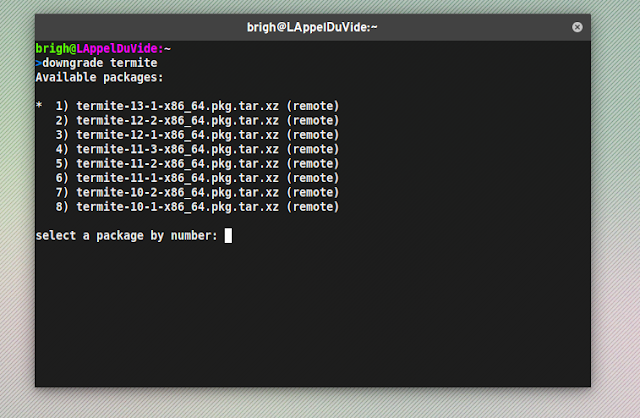

| downgrade in use |

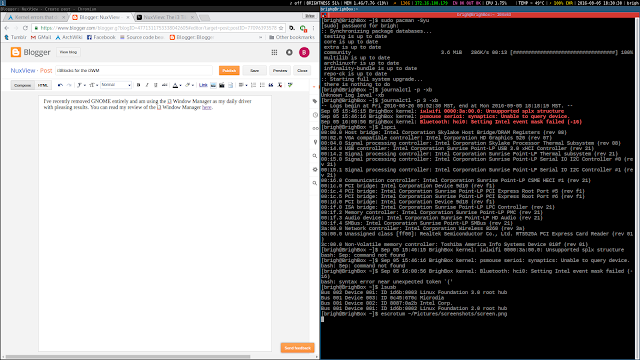

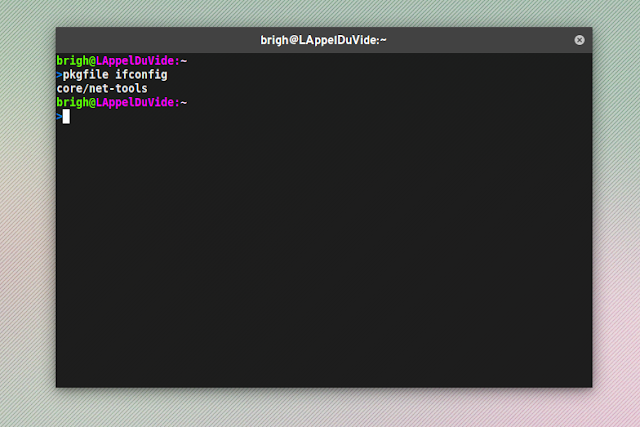

Dependencies:

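While it isn't hard to pull up a browser and look up which package a binary belongs to, 'pkgfile' (available in the official repositories) makes this so much easier. It searches the file lists of the sync repositories, so it can tell you which package provides a file even when that package isn't installed. Its usefulness really speaks for itself.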
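The core idea is a reverse lookup from file name to package. pkgfile builds its database from the repositories' file lists, which you refresh with `pkgfile --update`, usually as root. The toy Python model below illustrates just the lookup step. The package-to-files index is made-up sample data, not pkgfile's real database format, and the function names are hypothetical.

```python
from collections import defaultdict
from pathlib import PurePosixPath

# Made-up sample index (repo/package -> installed files). The real data comes
# from the sync repositories' file lists, not from a Python dict.
SAMPLE_INDEX = {
    "core/sed": ["usr/bin/sed", "usr/share/man/man1/sed.1.gz"],
    "core/coreutils": ["usr/bin/ls", "usr/bin/cat", "usr/share/man/man1/ls.1.gz"],
    "extra/tree": ["usr/bin/tree", "usr/share/man/man1/tree.1.gz"],
}


def build_binary_index(index):
    """Invert the index: executable name -> set of packages shipping it in usr/bin."""
    owners = defaultdict(set)
    for package, files in index.items():
        for path in map(PurePosixPath, files):
            if str(path.parent) == "usr/bin":
                owners[path.name].add(package)
    return owners


def packages_providing(binary, owners):
    """Return the sorted list of packages that provide `binary` (empty if none)."""
    return sorted(owners.get(binary, ()))


if __name__ == "__main__":
    owners = build_binary_index(SAMPLE_INDEX)
    print(packages_providing("tree", owners))  # ['extra/tree']
```

The sketch builds the inverted index once, up front, so each later lookup is a single dictionary access instead of a scan over every package's file list.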

*pkgfile in use*

Sed:

There's a good chance you're already familiar with 'sed'. If not, it stands for Stream EDitor, and it's probably one of the most versatile tools out there. I finally got around to figuring out how to use it. Not gonna lie, it looked like straight gibberish to me when I first encountered it. But now it makes so much of my life easier.

If you're yet to learn it, head over to this page and start reading! It took me about six careful read-throughs of this page and a whole bunch of practice to get the hang of it, but it's definitely worth the time investment.
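For a taste of why it's worth the effort, sed's bread and butter is the `s/regex/replacement/flags` substitution. It is applied line by line as text streams through, as in `sed 's/cat/dog/g' notes.txt`. The short Python sketch below mimics that one command to show the per-line model. The function name is hypothetical. The sketch uses Python's `re` syntax, which differs from sed's default basic regular expressions, so treat it as an illustration rather than a drop-in replacement.

```python
import re


def sed_substitute(lines, pattern, replacement, global_flag=False):
    """Roughly emulate `sed 's/pattern/replacement/[g]'` over an iterable of lines.

    Like sed, each line is processed independently. Without the g flag, only
    the first match on each line is replaced. Uses Python regex syntax, not
    sed's BRE, and Python's replacement escapes (e.g. \\1, but \\g<0> for sed's &).
    """
    regex = re.compile(pattern)
    count = 0 if global_flag else 1  # in re.sub, count=0 means "replace all"
    for line in lines:
        yield regex.sub(replacement, line, count=count)
```

For example, `list(sed_substitute(["cat cat\n"], "cat", "dog"))` gives `['dog cat\n']`, while passing `global_flag=True` gives `['dog dog\n']`. That is the same difference you see with sed with and without the `g` flag.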

Firefox:

In the past year, I've started using Firefox over Chromium. I heard that a new release was beautifully fast and smooth, so I had to see for myself.

It's true: in my experience, it's noticeably faster. When I pop over to Chromium to log into an account I can't remember the password for, it feels like Chromium takes twice as long to load a page. I don't know what the Firefox dev team did, but whatever it was, it's excellent. Good job y'all!

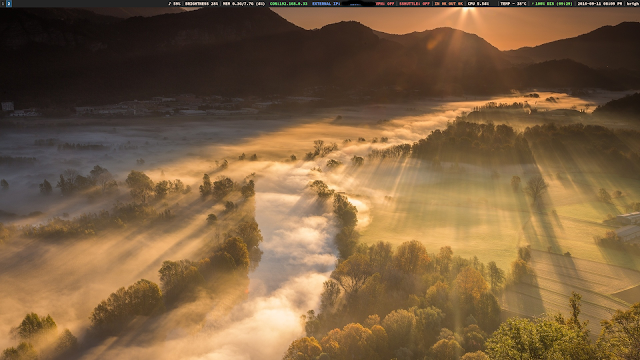

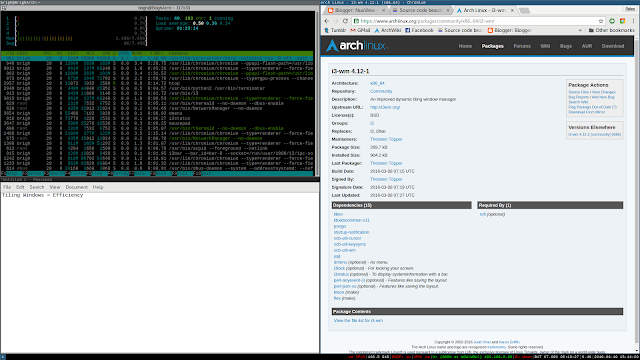

Desktop Environments:

I'm still using GNOME and i3. GNOME now uses Wayland by default, which I'm pretty into, but it's basically unusable without GDM. I've never been a big fan of display managers; it doesn't make sense to me to use up background resources just to have a nice login screen. I enjoy the command line, and logging in that way is my preferred choice. But you can't win them all.

Maybe I'll spend some time later figuring out how to circumvent GDM and still have everything working. We'll see, I guess.

As for i3, it's still as great as it ever was. I even managed to get DisplayLink running with it, so I can use my portable monitor.

Converting Manjaro to Arch:

Last semester, I needed to install Windows on my laptop so I could use a specific program for a class. It turns out that when dual booting, Windows needs to be installed first and Arch second, since the Windows installer tends to overwrite or ignore an existing Linux bootloader. Since it was the middle of the week and I needed to start using that program the next day, I installed Windows and then Manjaro instead of native Arch.

I was worried about time, and automating the install process seemed like the smartest move. After using Manjaro for about two weeks, I realized I was having trouble solving issues that I felt should be easy to solve. Though Manjaro is based on Arch, it's different enough that I missed just having Arch on my laptop. I needed Arch back.

So I followed this guide to migrate from Manjaro to Arch. I'm actually pretty proud of pulling this off without a hitch. I skipped some steps and added some of my own, and it was all said and done in less than 30 minutes.

Overall:

This was a good year for Linux and me; I feel like we really grew closer. We laughed, we cried, and I went an entire year without completely botching my OS, which is saying something, because I've been messing with my system more now than ever.

10/10 would do it all again.